Traditional SEO has changed. While Google indexes content faster than ever, the real challenge in 2026 isn't getting indexed. It is getting cited. When a user asks Gemini or ChatGPT a question, the model doesn't just look for keywords. It looks for content that exists in the same semantic neighborhood as the prompt.

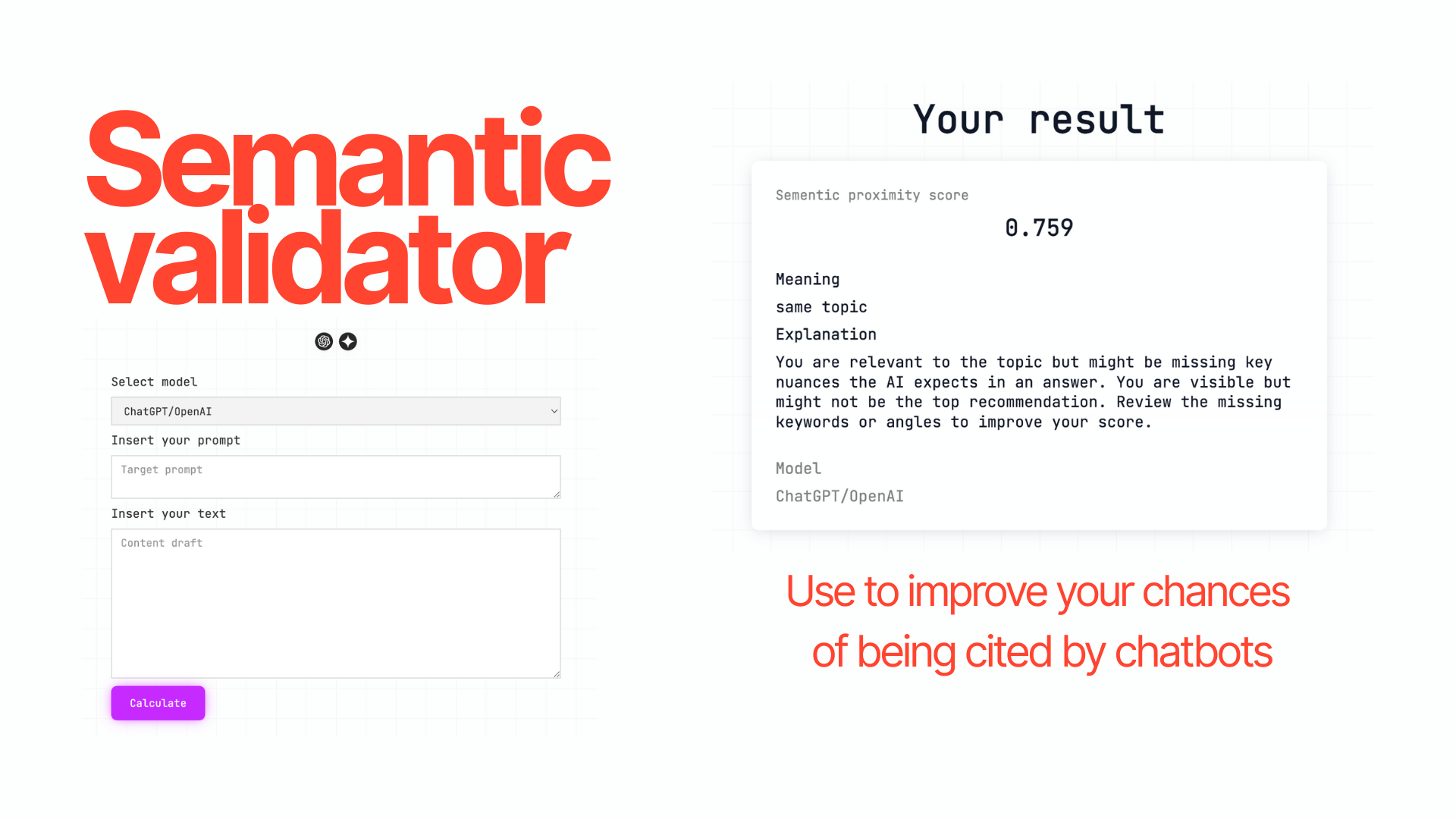

At Turbine Lab, we call this the Semantic Gap. We built the Semantic Validator to measure this gap before you publish your content.

The tool measures the semantic proximity between your draft and a specific prompt. It currently supports models like Gemini and ChatGPT to give you a clear picture of how these LLMs perceive your relevance.
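One way to picture "semantic proximity" is as the cosine similarity between two vectors, one for the draft and one for the prompt. The sketch below is only an illustration under stated assumptions: the text does not say how the Semantic Validator computes its score, and a production tool would presumably compare model embeddings rather than the raw word counts used here. The names `term_counts` and `cosine_proximity` are hypothetical.

```python
import math
import re
from collections import Counter


def term_counts(text: str) -> Counter:
    """Lowercase the text and count word tokens.

    Assumption: word counts are a crude stand-in for a real embedding.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_proximity(draft: str, prompt: str) -> float:
    """Cosine similarity between the two term-count vectors, in [0, 1]."""
    a, b = term_counts(draft), term_counts(prompt)
    # Only terms present in both texts contribute to the dot product.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)
```

With this toy measure, two identical texts score approximately 1.0 and two texts with no words in common score 0.0. Real drafts would land somewhere in between, which is the kind of scale the score categories describe.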

It isn't just about using "natural language." It is about whether your content actually covers the topics and nuances the AI expects to see in a high-quality answer.

We are currently running extensive research on Proximity Scores across different providers and will share those full results soon. For now, we have categorized scores based on how LLMs typically treat content relevance.

The highest band is non-existent in real-world writing. If you see a score there, the content is a mirror image of the prompt.

The next band down is very rare. It shows an extremely high alignment with the model's expectations.

Scores starting at 0.8 are rare. They show high semantic alignment, which is a sensible target for high-priority content.

A score between 0.6 and 0.79 is safe to publish. The content is relevant enough to be visible, and you can improve it if you want a higher chance of being cited.

If your score is below 0.6, the content is too broad or simply unrelated to the prompt. The AI considers your text a "miss" and will prioritize other sources.

A high score does not guarantee a citation. High semantic proximity is the foundation, but it is not the only factor. The model's final selection for a citation is also influenced by your domain trust, the technical health of your site, the overall semantic scope of the topic, and other factors.

A high score means you have the right "key" to open the door, but the model still checks your credentials before letting you in.

This tool is designed to be a standard part of your editorial workflow. Instead of guessing if a draft is "good enough," your team can now validate it.

If a draft scores below 0.6, it’s a signal to pause and realign. Instead of publishing content the AI might ignore, you can refine the focus until you hit that 0.6+ baseline. It ensures your team’s effort goes into content that actually has a chance to be seen.
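The 0.6 baseline can be applied as a simple editorial gate. The sketch below is a hypothetical illustration, not part of the Validator: the `Draft` class, the `triage` function, and the assumption that each draft already carries a Proximity Score are all invented for the example. Only the 0.6 threshold comes from the text.

```python
from dataclasses import dataclass

PUBLISH_BASELINE = 0.6  # baseline stated in the text


@dataclass
class Draft:
    title: str
    proximity_score: float  # assumed to be supplied by the scoring tool


def triage(drafts):
    """Split drafts into (ready, needs_realignment) using the 0.6 baseline."""
    ready = [d for d in drafts if d.proximity_score >= PUBLISH_BASELINE]
    needs_realignment = [d for d in drafts if d.proximity_score < PUBLISH_BASELINE]
    return ready, needs_realignment


if __name__ == "__main__":
    queue = [Draft("Pricing guide", 0.72), Draft("Company news", 0.41)]
    ready, rework = triage(queue)
    print("Ready:", [d.title for d in ready])
    print("Realign:", [d.title for d in rework])
```

A gate like this keeps the decision mechanical: drafts at or above the baseline move on, and the rest go back for a tighter focus before anyone spends time on publishing them.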

The Validator is just one piece of the puzzle. It is part of a broader shift toward understanding your brand's "Semantic Snapshot."

We are moving toward a world where you don't just track individual keywords, but rather the entire semantic space your brand occupies. This tool helps you ensure that every new piece of content you produce is moving your snapshot in the right direction.

GEO (Generative Engine Optimization) is the practice of optimizing content so it is found, understood, and cited by AI models like ChatGPT and Gemini. In 2026, many users get information through AI summaries rather than by clicking blue links. If your content isn't optimized for GEO, your brand becomes invisible in the answers these models provide.

The Proximity Score is a metric that tells you how closely your text matches the intent of a specific user prompt. A high score means your content is semantically aligned with how an AI model like Gemini or ChatGPT expects the question to be answered. A low score means there is a "Semantic Gap" between your content and the prompt, making it unlikely that the AI will cite you.

A high score does not guarantee a mention on its own. Think of it as your entry ticket: it shows your content is semantically "correct" for the query. However, the final mention also depends on your brand’s overall authority, technical health, and domain trust. The Validator tells you that your content is right, but the AI still checks your "credentials" before it decides to cite you.

For most day-to-day content, a score between 0.6 and 0.79 is perfectly fine. It means your draft is relevant, on-topic, and ready to be published. While hitting 0.8 or higher is a great result for high-stakes pieces, it is quite rare and isn't necessary for every post. As long as you stay above the 0.6 mark, you have avoided the "Semantic Gap" and your content is in a good position to be visible.
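The thresholds mentioned here can be written down as a small lookup. This is a minimal sketch under assumptions: the function name `score_band` and the band labels are hypothetical, scores are assumed to fall between 0 and 1, and everything at 0.8 or above is grouped into one band because the text gives no cutoffs for the rarer bands above it.

```python
def score_band(score: float) -> str:
    """Map a Proximity Score to the bands named in the text.

    Assumption: scores lie in [0, 1]; only the 0.6 and 0.8 cutoffs
    come from the text, so higher bands are not distinguished.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    if score >= 0.8:
        return "high alignment (rare)"
    if score >= 0.6:
        return "safe to publish"
    return "semantic gap"


if __name__ == "__main__":
    for s in (0.45, 0.6, 0.79, 0.8):
        print(s, "->", score_band(s))
```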