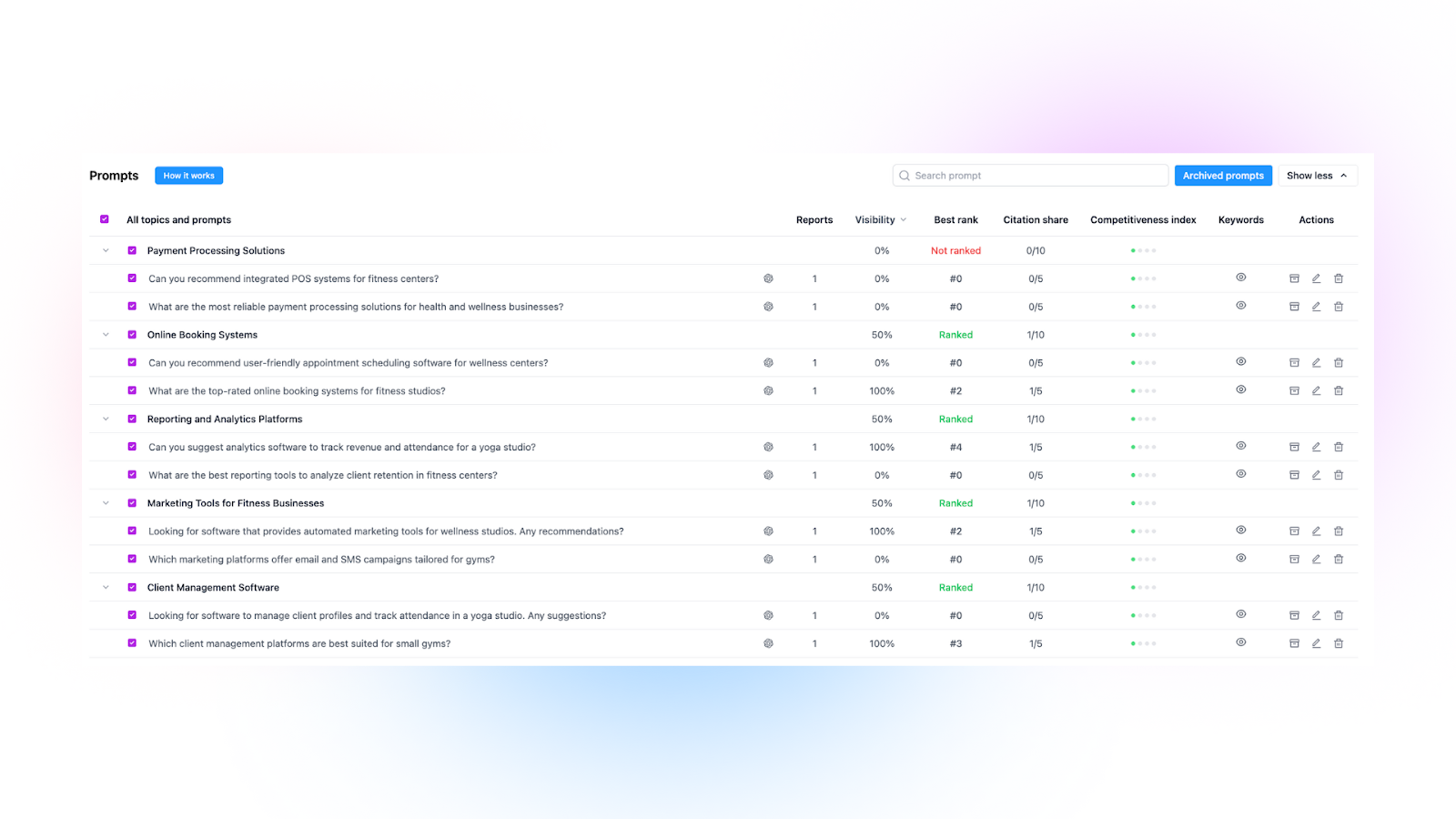

So you’ve just received your first Turbine visibility report. You might be seeing unfamiliar competitors or wondering why your high-ranking SEO pages aren't showing up in ChatGPT.

The rules of the game have changed. This guide breaks down the core logic of AI visibility and how to use your report to dominate the new search landscape.

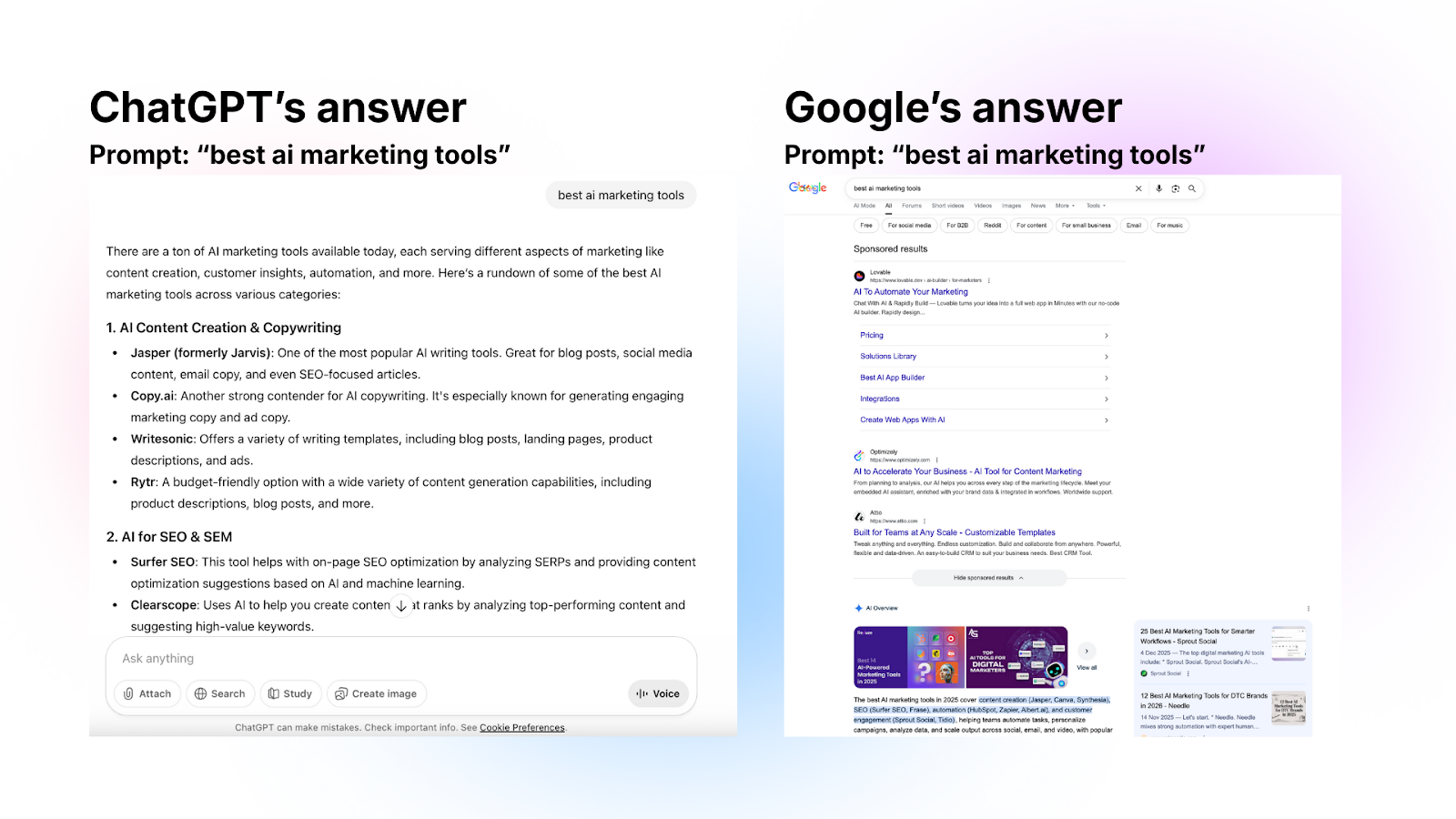

LLMs do not match keywords the way traditional search engines do. They work through semantic search.

When a user types a prompt, the LLM transforms it into a mathematical representation of meaning called an embedding. It then looks for content that matches that meaning rather than just matching words.
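To make the idea of matching on meaning concrete, here is a minimal sketch. The three-number vectors below are hand-written stand-ins for embeddings (an assumption for illustration; real embedding models output vectors with hundreds or thousands of dimensions), and the example phrases are hypothetical. The comparison itself uses cosine similarity, a common way to score how close two embeddings are.

```python
import math

# Hypothetical, hand-written "embeddings" for illustration only.
# A real system would get these vectors from an embedding model.
EMBEDDINGS = {
    "best tool to track brand mentions in AI answers": [0.9, 0.1, 0.2],
    "software for monitoring visibility in chatbots": [0.8, 0.2, 0.3],
    "cheap flights to Lisbon": [0.1, 0.9, 0.1],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_meaning(prompt, embeddings):
    """Rank every other text by how close its vector is to the prompt's vector."""
    query = embeddings[prompt]
    scores = [
        (text, cosine_similarity(query, vector))
        for text, vector in embeddings.items()
        if text != prompt
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


ranked = rank_by_meaning("best tool to track brand mentions in AI answers", EMBEDDINGS)
for text, score in ranked:
    print(f"{score:.2f}  {text}")
```

The two brand-monitoring phrases share almost no words, yet their vectors point in nearly the same direction, so they score as close matches. The travel phrase scores low even though it is a perfectly valid search query.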

To win here, you need to stop chasing word counts and start filling semantic gaps. These are the topics and entities your competitors are associated with that you are currently missing.
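One simple way to think about a semantic gap is as a set difference: the topics your competitors are associated with, minus the topics you are associated with. The sketch below assumes you already have those topic lists (for example, collected by hand from your report). The function name, brand names, and topics are all hypothetical and not part of any Turbine API.

```python
def semantic_gaps(your_topics, competitor_topics):
    """Map each topic you are missing to the competitors associated with it.

    your_topics: iterable of topic strings associated with your brand.
    competitor_topics: dict of competitor name -> iterable of topic strings.
    """
    covered = {topic.lower() for topic in your_topics}
    gaps = {}
    for competitor, topics in competitor_topics.items():
        for topic in topics:
            key = topic.lower()
            if key not in covered:
                gaps.setdefault(key, []).append(competitor)
    return gaps


# Hypothetical example data.
mine = ["invoicing", "expense tracking"]
theirs = {
    "BrandA": ["invoicing", "freelancer taxes"],
    "BrandB": ["freelancer taxes", "payroll"],
}

gaps = semantic_gaps(mine, theirs)
# Topics tied to more competitors are listed first.
for topic, brands in sorted(gaps.items(), key=lambda item: -len(item[1])):
    print(f"{topic}: covered by {', '.join(brands)}")
```

Sorting by how many competitors cover a topic is one possible way to prioritize; a topic that several competitors own and you do not is usually the first gap worth filling.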

A common point of confusion is where chatbots get their information. How do they know details that were only on a previous version of my website several months ago? Aren't they just searching the web for answers?

The answer lies in two distinct processes:

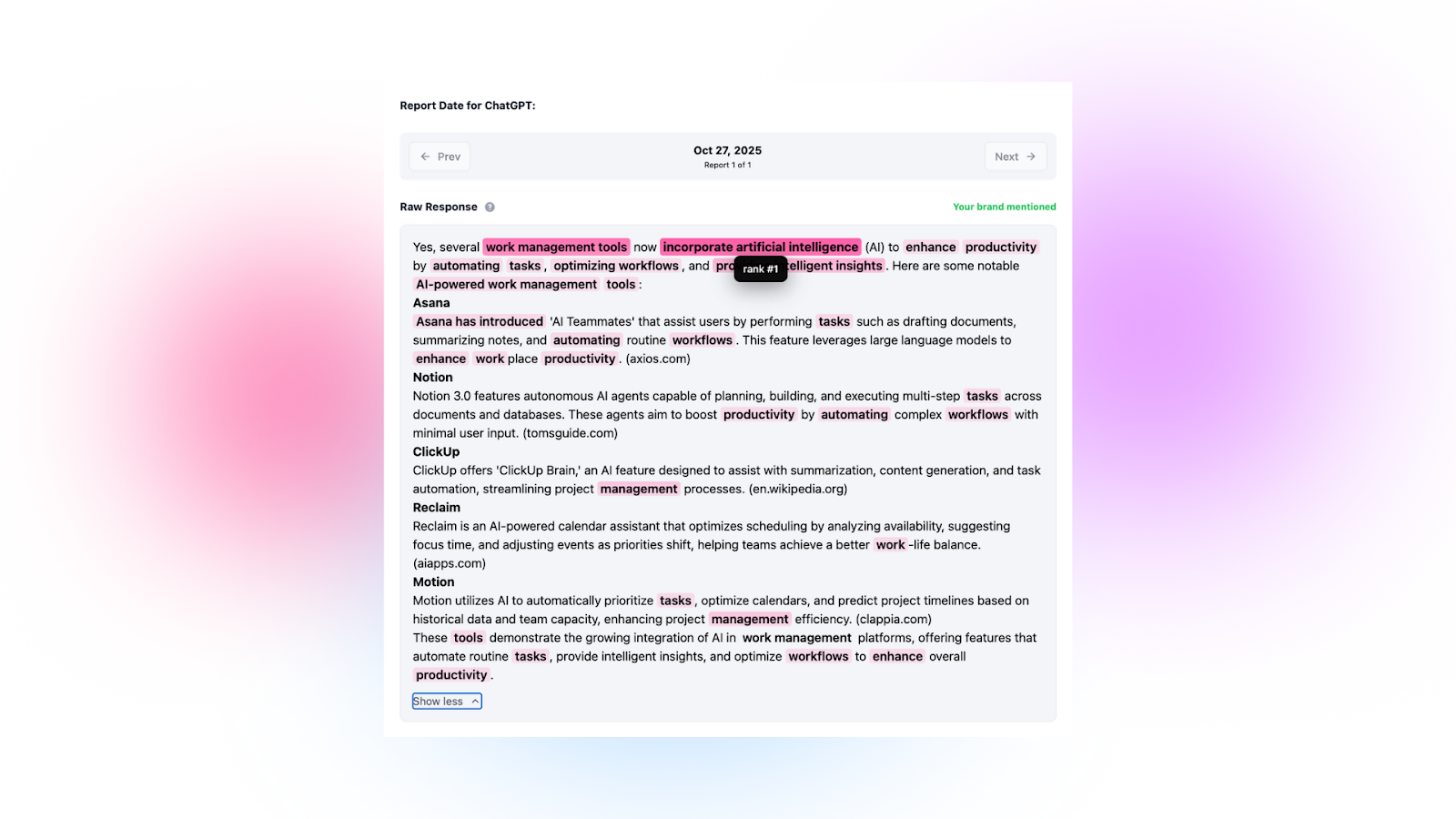

Training: LLMs are trained on massive datasets. If your website was available and crawled months ago, the model already knows your brand features. It pulls from its internal memory first.

Browsing: If the LLM lacks specific details, needs recent data, or wants to verify its answer, it can trigger a live search. It then cites the sources it used so you can check where the answer came from.
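The two processes above can be pictured as a simple fallback: answer from what was learned in training, and only search live when that memory comes up empty. The sketch below is a deliberately simplified toy, not how any specific chatbot is implemented. The dictionary standing in for trained knowledge, the `fake_search` function, the brand name "Acme", and the example URL are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Answer:
    text: str
    citations: list = field(default_factory=list)
    used_live_search: bool = False


def answer(prompt, trained_knowledge, live_search):
    """Answer from memory first; fall back to live search with citations."""
    remembered = trained_knowledge.get(prompt)
    if remembered is not None:
        # Training: the model already "knows" this, so no sources are cited.
        return Answer(text=remembered)

    # Browsing: nothing in memory, so run a live search.
    results = live_search(prompt)  # expected: list of (snippet, url) pairs
    if not results:
        return Answer(text="No information found.", used_live_search=True)
    return Answer(
        text=" ".join(snippet for snippet, _ in results),
        citations=[url for _, url in results],
        used_live_search=True,
    )


# Hypothetical stand-ins for a model's memory and a search backend.
memory = {"What does Acme do?": "Acme sells invoicing software."}


def fake_search(prompt):
    return [("Acme launched payroll features this month.", "https://example.com/acme-news")]


old = answer("What does Acme do?", memory, fake_search)
new = answer("What did Acme launch recently?", memory, fake_search)
print(old.text, old.citations)
print(new.text, new.citations)
```

This is why details from an older version of your site can still surface: they were answered from memory, with no live page involved. Only the second kind of answer carries citations you can trace.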

Chatbots prioritize trust and relevance. Their decisions are driven by four core factors:

One of the first questions we get is: "How do people actually ask chatbots about my brand?"

There is no public search console for LLMs. Data on exact user prompts is a black box held by OpenAI, Google, and Perplexity. Rather than guessing, use high-probability modeling.

At Turbine, we recommend reconstructing the user journey through three specific layers:

A common fear is that optimizing for LLMs (Generative Engine Optimization) will hurt your traditional SEO.

It will not. The two are connected, and you can build for both systems at once.

Think of it this way: SEO gets you onto the library shelf, while GEO makes the librarian recommend you by name when someone asks for advice. You can and should do both, but your GEO strategy needs to focus on the features LLMs value, such as structured lists and reviews.

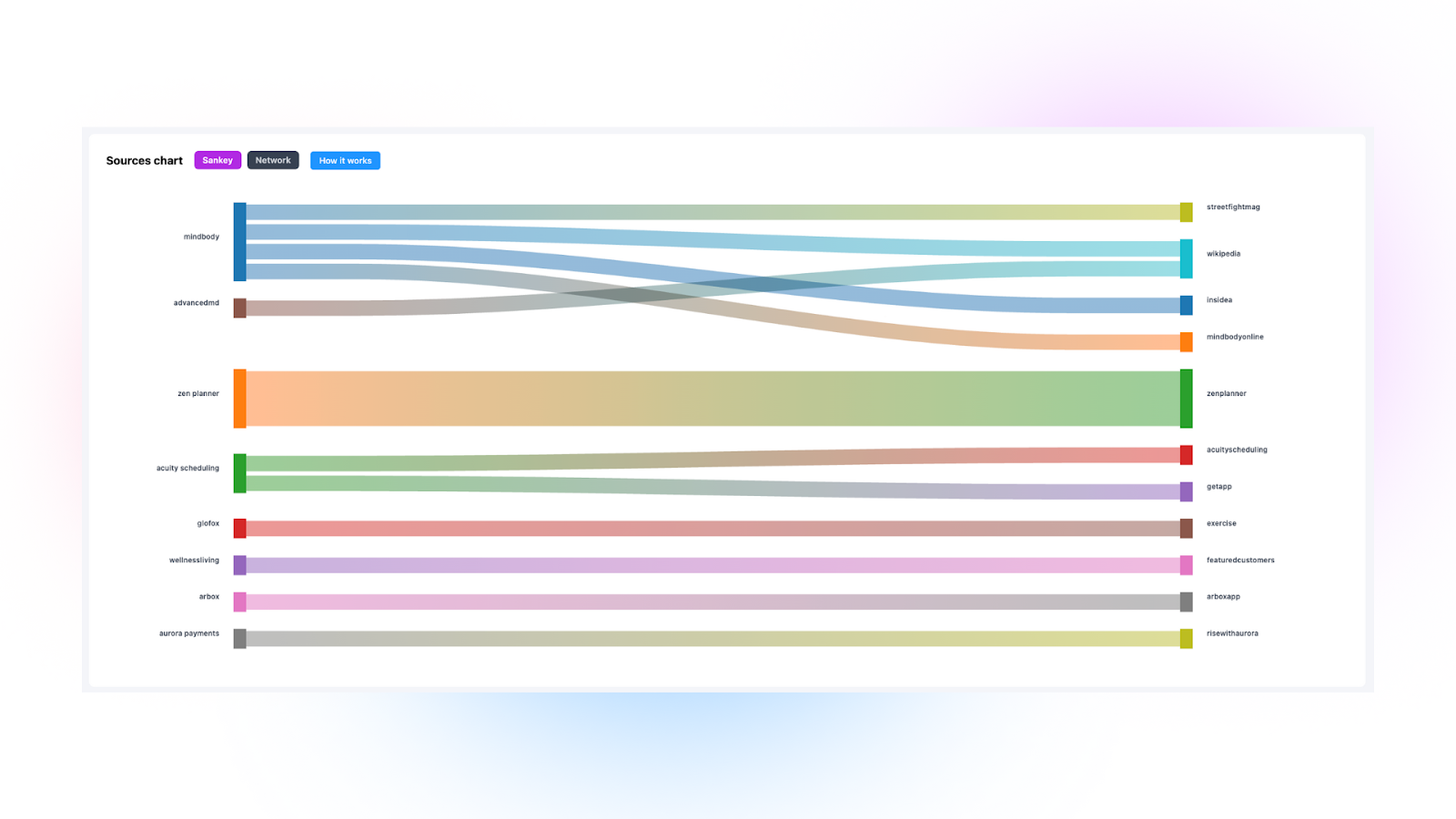

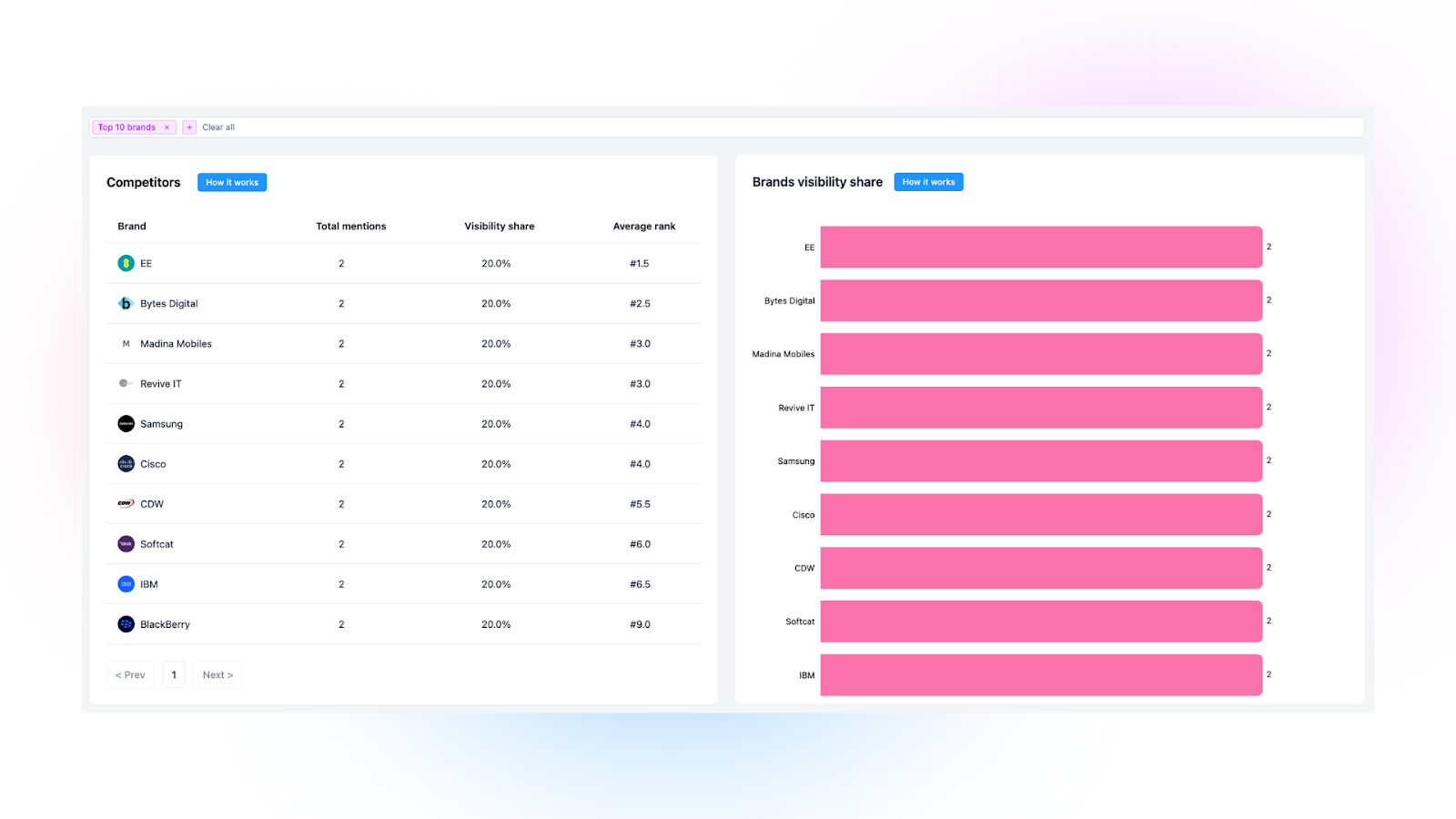

You might see companies in your report that aren't your direct niche competitors. In the world of LLMs, these are indirect semantic competitors.

This is not a mistake; it is a massive opportunity. The LLM sees these brands in the same competitive space as you because they target the same user problems.

The strategy can be simple. If a non-niche competitor is visible, look at where they are being mentioned. By appearing on the same platforms or using their popular content formats, you can capture that visibility for your own niche.

Your Turbine report is more than just a ranking. It is the start of understanding your brand’s semantic bubble. To stay ahead, track your data daily, experiment with new prompts in the Playground, and update or create content based on your findings. Use these insights to pinpoint exactly where you are invisible, bridge your source gaps, and start building the content that LLMs want to recommend. Reach out to the Turbine team if you need help.

A semantic gap is the difference between the keywords you use and the actual meaning an LLM associates with your brand. If a model understands your product but does not connect it to a specific user problem, you have a gap that prevents you from being recommended.

Generative Engine Optimization does not replace SEO; it is an evolution of it. While traditional SEO helps you rank in a list of links, GEO ensures your brand is understood and cited inside the actual AI response. You need a solid SEO foundation for AI crawlers to trust your site in the first place.

AI models prioritize brands with the strongest proof across the web. If your competitors have more structured data, better headings, or more mentions on high-authority platforms like Reddit or industry news sites, the model sees them as a safer and more relevant recommendation.

LLMs are probabilistic, and the models behind them are updated frequently. Checking your data daily or weekly helps you catch shifts in how your brand is perceived and lets you close new semantic gaps before competitors turn them into a lasting advantage.

You cannot pay to be in a chatbot's memory. You influence chatbots by providing clear, structured content on your site and by building a footprint of mentions on the external platforms the models use for verification.